PRISM Data QC

The quality of your input data directly affects the accuracy and reliability of the insights or results derived from it.

One of the most important lessons an analyst learns is how to thoroughly check a data set for issues arising from such things as well condition, well deviation and tool performance.

PRISM Data QC, using heuristics and hierarchical statistical methods, highlights depth ranges in your data which could impact your ability to answer the survey objectives.

An automatically generated report flagging any zones of concern is an invaluable place to start when you begin processing multi-finger caliper data.

Centralisation is crucial for accurate calculation of penetrations to the tubing wall and often involves a balanced approach to avoid excessive processing time on one aspect of the processing workflow.

Without PRISM Centralisation, the analyst would select one mode of centralisation for the whole survey and quality check the result before deciding whether to proceed with the rest of the analysis or try other methods.

If one mode does not work throughout the whole data set, splicing several methods of centralisation together is also a possibility when working without PRISM Centralisation. This takes more time and introduces more room for user error.

In surveys that contain high inner radius and low diameter features (e.g., metal loss and deposition), centralisation using all radial values can introduce processing artefacts, which then need to be explained away in a report.

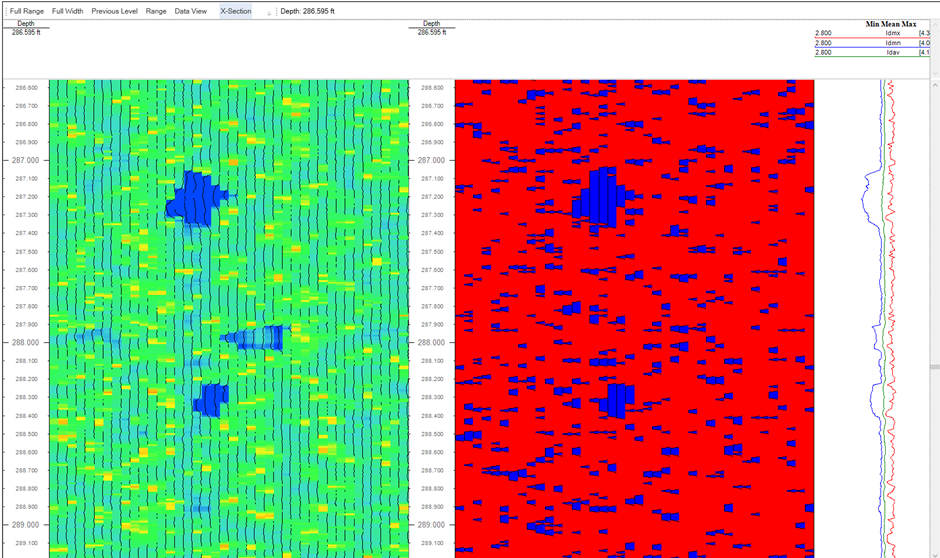

PRISM Centralisation scans every depth frame of the data set making the best choice of centralisation method throughout the caliper survey. This is a process that would be impossible for a human to replicate.

The left-hand side is the multi-finger caliper data. The right-hand side is a map that displays the data from each arm that is being excluded to get the best centralisation result.

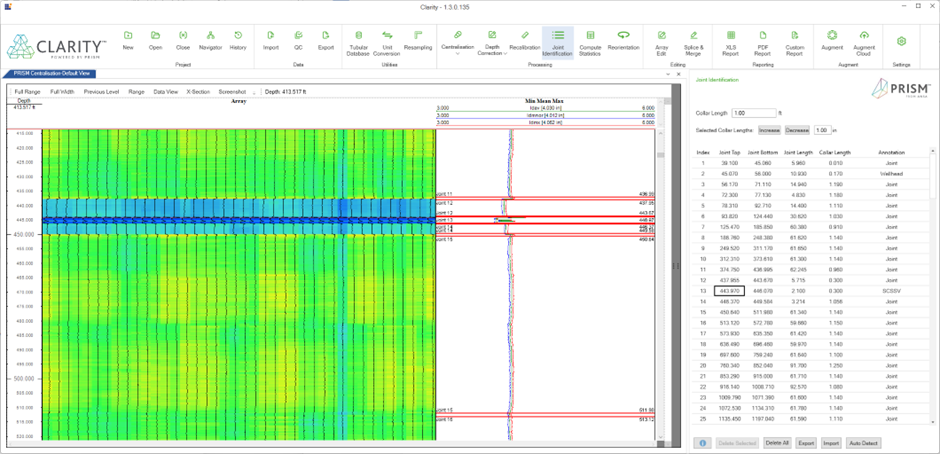

Joint detection, which can take hours with alternative software, now takes minutes with PRISM.

It takes just one click to find and exclude connections from the survey results. Other software requires the user to define detection parameters to help detect the connections, which adds time to the overall process.

To enable calculation and reporting of statistical values based on the recorded data and tubular nominal dimensions, analysts can now use parameter-free PRISM Joint detection.

Machine learning allows users to isolate tubing or casing connections which could otherwise report as misleading metal loss features, making the analysis of the tubing body harder.

The right-hand table lists the automatically detected joint tops and bottoms. Completion items are also detected, and some of them are named by Clarity.

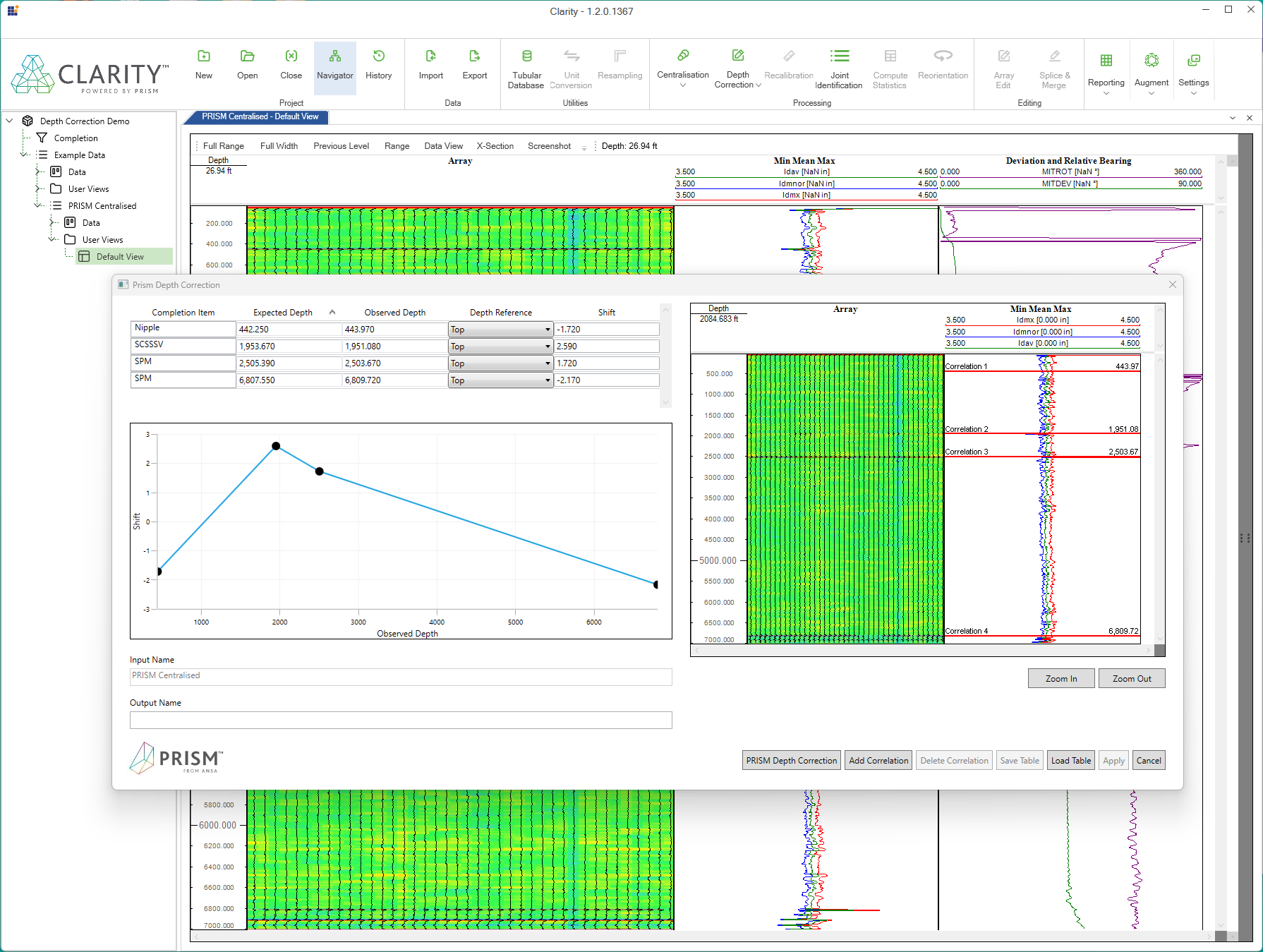

Matching the survey depth record with a reference document such as a well schematic is essential for several reasons. It allows comparison of dissimilar surveys and of the same survey type in the same well across time, and it informs depth correlations on future well interventions using the same conveyance.

To complete depth correction, the analyst must spend time finding completion items (e.g., SSVs, SSDs) in a well schematic or tubing/casing tally and in the survey data so they can return the data to schematic quoted depths.

By combining three powerful modules (Downhole Item Identification, Joint Identification, Assisted Depth Correction), you can now have Clarity™ scan the data and suggest the required depth correction. This replaces the manual process of scrolling through the data, matching the acquired depths with the reference depths and calculating the depth corrections.

The well schematic is read by PRISM Assisted Depth Correction and the reference depths of completion items such as safety valves, gas lift mandrels, tubing crossover etc. are extracted.

PRISM Downhole Item Identification automatically finds all the completion items in the survey data. It reports the depths of these items, which gives an analyst the input and output depths, allowing Clarity™ to report the depth correction pairs that are required to shift the data to the depth reference.

Top left is completion information entered by the user paired with those completion items found in the data set by Clarity. Right hand side is an image of the caliper data with a red adjustable marker at each depth correction point.

Several downhole forces can lead to deformation of the tubing or casing: mechanical stress during handling and installation, formation movement, pressure and temperature differentials and cyclic loading and unloading are some of these causes.

The presence of deformation is processed out whilst correcting for decentralisation of the toolstring. Therefore, the analyst has to inspect the raw data for indications of pipe deformation.

PRISM Deformation Analysis inspects the data for recognisable responses to automatically highlight deformed pipe and reports on restriction diameters and maximum drift lengths.

Comparing multifinger data sets in the same tubing or casing over time gives the well owner valuable information. The comparison helps to identify changes in deformation, wear and corrosion features that occurred between the two surveys, allowing the operator to plan for maintenance and repairs.

The two data sets must be on depth with each other and with the reference. Processing steps used in each data set should be confirmed to ensure that no unwarranted edits have been applied. If the analyst is presented with two processed data sets with no history, they may have to assume no change at particular downhole items and recalibrate the data set to allow a comparison.

This module helps Clarity automatically detect completion items and connections and puts the two data sets on depth with each other. If Clarity was used to process both data sets then the processing history will be analysed to ensure that similar methodology was applied.

Statistical values to describe the evolution of any loss features, deformation or deposition can then be created. Several charts and tables that illustrate the changes over time are then available to add at the custom reporting stage.

Occasionally, the analyst will be presented with a survey in which the recorded values have been altered by an external factor. Examples include high well temperatures combined with a lack of temperature compensation for the tool, or hardened fingertips that have worn away completely, after which the metal of the arm wears quickly and the radius values from that arm read higher than expected. At times, it might also be necessary to replicate processing methods from an older survey to allow a time-lapse comparison.

Experienced analysts can create the required shift values from the correct log intervals to adjust for these effects. However, it is a time-consuming process. PRISM Recalibration will allow all users to reach the same results within seconds.

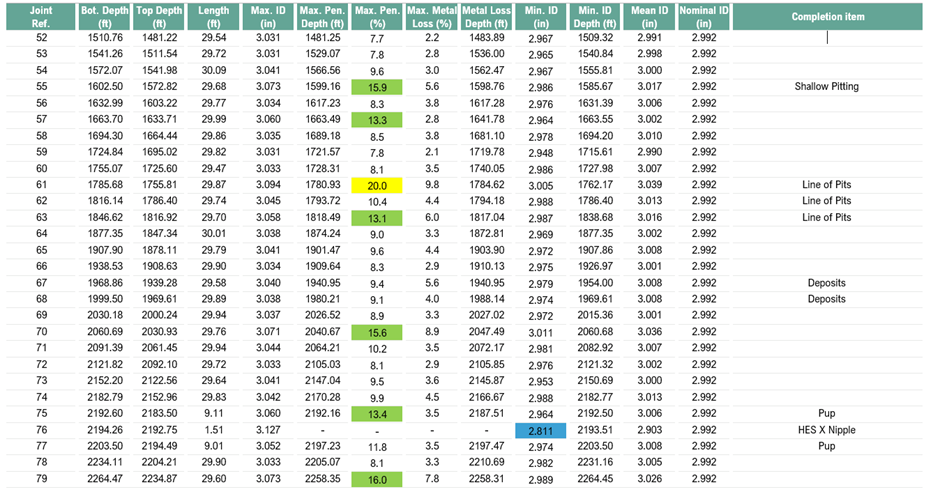

It takes an analyst time and experience to be able to take a correctly processed data set and describe the visual representation of the features to the audience in a timely manner.

Being able to maximise your time and deliver maximum value to your customers by prioritising which features to describe in text and images in your report is important. Quite often, a compromise is reached as not every single feature will be described in the same level of detail.

For each joint and for each identifiably unique feature, Clarity provides descriptive terms to let the audience understand exactly what is happening at every point in their well, differentiating between wear, corrosion, deformation, and deposition at every depth. With Clarity there is no need to compromise by only describing the maximum penetration value when Clarity™ can describe as many features as you want to include in your report.

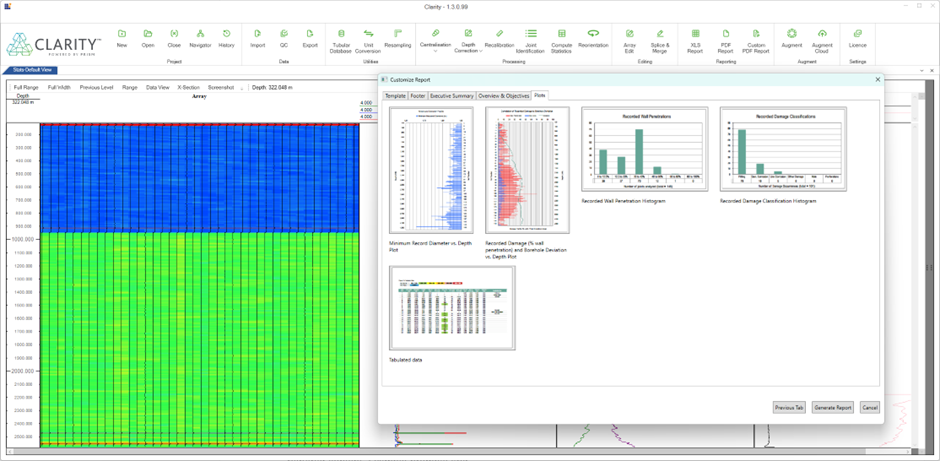

When you need to present your findings, you also must lay out the text, graphics, charts, and tables in your own branded material that is both easy to comprehend and sets your product apart.

Up until now automatic reporting has been limited to using a basic, white label style output. With Clarity you can use your own branded Microsoft Office document as a template which is set up with ‘mail merge’ style prompts for Clarity to automatically populate the report with the required content. Example prompts could be: –

If you already have a Microsoft document template ANSA can work with you to insert the prompts so that you can get up and running as quickly as possible.

Version one of Customised Reporting is only the beginning. Get in on the ground level now to have your say in what we add next. We have some exciting ideas…

Clarity contains ‘hands-on’ versions of all PRISM functions to allow you to take control of each processing step. It also includes various utilities that might not be included in PRISM modules to allow full processing of a multifinger caliper data set.

Import your data in the widely accepted .LAS and .DLIS formats. Export your processed data for delivery to your customer in LAS format.

In an ideal processing workflow, the imported log values will be defined by the correct unit of measurement. If not, any log value with a defined unit can be converted into another unit of measurement.
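As an illustration of the idea only, the sketch below converts a curve between length units through a common base unit. The unit mnemonics and the `convert` helper are assumptions made for this example and are not part of Clarity.

```python
# Conversion factors from each unit to metres.
# The mnemonics used here are illustrative assumptions.
TO_METRES = {"m": 1.0, "ft": 0.3048, "in": 0.0254, "mm": 0.001}


def convert(values, from_unit, to_unit):
    """Convert a list of length values from one known unit to another."""
    for unit in (from_unit, to_unit):
        if unit not in TO_METRES:
            raise ValueError(f"unknown unit: {unit}")
    factor = TO_METRES[from_unit] / TO_METRES[to_unit]
    return [v * factor for v in values]


# Example: finger radii recorded in inches, converted to millimetres.
radii_in = [1.5, 1.52, 1.49]
radii_mm = convert(radii_in, "in", "mm")
```

Routing every conversion through one base unit keeps the table small: adding a new unit needs a single factor rather than one entry per unit pair.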

It is recommended to work with multifinger caliper data at the maximum acquired resolution to capture the most value from the survey. However, when working with data at different sample rates, or to alter the volume of data, you can upsample or downsample as required.
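A minimal sketch of resampling a single curve onto a new regular depth step is given below. It assumes strictly increasing depths and uses plain linear interpolation for both directions, which is a simplification: real downsampling would normally average or filter first so that narrow features are not lost. The `resample` function is hypothetical.

```python
def resample(depths, values, new_step):
    """Linearly interpolate a curve onto a regular depth grid.

    depths must be strictly increasing; new_step may be smaller
    (upsampling) or larger (downsampling) than the original spacing.
    """
    if len(depths) != len(values) or len(depths) < 2:
        raise ValueError("need two or more matching depth/value samples")
    if new_step <= 0:
        raise ValueError("new_step must be positive")
    start, stop = depths[0], depths[-1]
    count = int((stop - start) / new_step + 1e-9) + 1
    new_depths = [start + i * new_step for i in range(count)]

    new_values = []
    j = 0  # index of the left-hand original sample
    for d in new_depths:
        while j < len(depths) - 2 and depths[j + 1] < d:
            j += 1
        t = (d - depths[j]) / (depths[j + 1] - depths[j])
        new_values.append(values[j] + t * (values[j + 1] - values[j]))
    return new_depths, new_values


# Example: a curve sampled every 1.0 depth unit, resampled to 0.5 and to 2.0.
depths = [0.0, 1.0, 2.0]
values = [10.0, 20.0, 40.0]
up_depths, up_values = resample(depths, values, 0.5)
down_depths, down_values = resample(depths, values, 2.0)
```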

As opposed to the PRISM method, where Clarity makes the best choices at every depth frame, you can also make your own choices manually. The outlier count, the geometric fitting and method of excluding outlier values can be edited. You can also zone your choices differently throughout the survey to account for changes in well condition.
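To make the three manual choices concrete (outlier count, geometric fit, exclusion method), here is a rough sketch for a single depth frame. It is not Clarity's algorithm. It assumes evenly spaced fingers and a small tool offset, so that each radius is modelled as `R + a*cos(theta) + b*sin(theta)`, and it excludes the fingers with the largest residuals before refitting. All function names are hypothetical.

```python
import math


def _solve3(m, v):
    """Solve a 3x3 linear system by Gaussian elimination with pivoting."""
    a = [row[:] + [rhs] for row, rhs in zip(m, v)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, 3):
            f = a[r][col] / a[col][col]
            for c in range(col, 4):
                a[r][c] -= f * a[col][c]
    x = [0.0, 0.0, 0.0]
    for r in (2, 1, 0):
        s = a[r][3] - sum(a[r][c] * x[c] for c in range(r + 1, 3))
        x[r] = s / a[r][r]
    return x


def _fit(radii, thetas, use):
    """Least-squares fit of R, a, b using only the finger indices in use."""
    m = [[0.0] * 3 for _ in range(3)]
    v = [0.0] * 3
    for i in use:
        basis = (1.0, math.cos(thetas[i]), math.sin(thetas[i]))
        for p in range(3):
            v[p] += basis[p] * radii[i]
            for q in range(3):
                m[p][q] += basis[p] * basis[q]
    return _solve3(m, v)


def centralise_frame(radii, outlier_count=0):
    """Remove the fitted eccentricity term from one frame of finger radii."""
    n = len(radii)
    thetas = [2 * math.pi * i / n for i in range(n)]
    use = list(range(n))
    mean_r, a, b = _fit(radii, thetas, use)
    if outlier_count > 0:
        def residual(i):
            model = mean_r + a * math.cos(thetas[i]) + b * math.sin(thetas[i])
            return abs(radii[i] - model)
        # Keep the best-fitting fingers and refit without the outliers.
        use = sorted(use, key=residual)[: n - outlier_count]
        mean_r, a, b = _fit(radii, thetas, use)
    return [r - a * math.cos(t) - b * math.sin(t) for r, t in zip(radii, thetas)]


# Synthetic frame: 24 fingers, an off-centre tool, one finger in a pit.
n = 24
frame = [50.0 + math.cos(2 * math.pi * i / n) - 0.5 * math.sin(2 * math.pi * i / n)
         for i in range(n)]
frame[5] += 3.0
all_fingers = centralise_frame(frame)
one_excluded = centralise_frame(frame, outlier_count=1)
```

In this synthetic frame, fitting with all fingers lets the pit bias the centre estimate, so the undamaged fingers no longer read exactly 50.0. Excluding one outlier restores them, which is the kind of artefact the choice of exclusion settings is meant to control.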

To complete manual depth correction the user can enter pairs of depths to shift the data as required to either a reference document or curve.
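One common way to apply such depth pairs is piecewise linear interpolation between them, with a constant shift above the first pair and below the last. The sketch below assumes that convention; Clarity's own method may differ, and `correct_depth` is a hypothetical helper.

```python
from bisect import bisect_right


def correct_depth(depth, pairs):
    """Map a logged depth to a reference depth.

    pairs is a non-empty list of (logged_depth, reference_depth) tuples
    with distinct logged depths. Between pairs the mapping is linear;
    outside them the nearest pair's shift is applied unchanged.
    """
    if not pairs:
        raise ValueError("at least one depth pair is required")
    pairs = sorted(pairs)
    logged = [p[0] for p in pairs]
    reference = [p[1] for p in pairs]
    if depth <= logged[0]:
        return depth + (reference[0] - logged[0])
    if depth >= logged[-1]:
        return depth + (reference[-1] - logged[-1])
    k = bisect_right(logged, depth) - 1
    t = (depth - logged[k]) / (logged[k + 1] - logged[k])
    return reference[k] + t * (reference[k + 1] - reference[k])


# Example: two completion items found 2.0 and 4.0 depth units shallow.
pairs = [(1000.0, 1002.0), (2000.0, 2004.0)]
mid_point = correct_depth(1500.0, pairs)
above = correct_depth(500.0, pairs)
below = correct_depth(2500.0, pairs)
```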

Rather than allowing PRISM to identify effects such as temperature drift or finger wear the user can take control. The user can define the zones of data which will be used to create shift values and the method of calculating the shift values.
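As a sketch of what a user-defined recalibration might look like, the example below takes a depth zone assumed to be undamaged pipe, computes one shift per finger as the nominal radius minus that finger's median reading in the zone, and applies the shifts to every frame. The choice of the median and the function names are assumptions for illustration only.

```python
from statistics import median


def finger_shifts(depths, frames, zone, nominal_radius):
    """Return one shift per finger from a calibration zone (top, bottom)."""
    top, bottom = zone
    in_zone = [f for d, f in zip(depths, frames) if top <= d <= bottom]
    if not in_zone:
        raise ValueError("no frames fall inside the calibration zone")
    finger_count = len(in_zone[0])
    return [nominal_radius - median(f[i] for f in in_zone)
            for i in range(finger_count)]


def apply_shifts(frames, shifts):
    """Add each finger's shift to its radius in every frame."""
    return [[r + s for r, s in zip(frame, shifts)] for frame in frames]


# Example: finger 1 reads 0.4 too high throughout (for instance, a worn tip).
depths = [100.0, 101.0, 102.0, 103.0]
frames = [[50.0, 50.4], [50.0, 50.4], [50.0, 50.4], [52.0, 50.4]]
shifts = finger_shifts(depths, frames, zone=(100.0, 102.0), nominal_radius=50.0)
recalibrated = apply_shifts(frames, shifts)
```

The feature at 103.0 on finger 0 lies outside the zone, so it does not influence the shift and is preserved after recalibration.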

A useful feature ‘rotates’ the data so that the centre of the multi-arm track contains the data representing the high side of the pipe. This helps when visualising features that sit towards a certain part of the pipe and that tool rotation would otherwise make harder to see.
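The rotation can be pictured as a circular shift of the finger array at each depth frame. The sketch below assumes evenly spaced fingers and a relative bearing value giving the angle of finger 0 from the high side of the pipe, in degrees; both the sign convention and the `rotate_to_high_side` helper are assumptions, not the tool's documented behaviour.

```python
def rotate_to_high_side(radii, relative_bearing_deg):
    """Circularly shift one frame so the high-side finger sits mid-track.

    Finger i is assumed to be at angle relative_bearing_deg + 360*i/n
    from the high side of the pipe.
    """
    n = len(radii)
    step = 360.0 / n
    # Index of the finger whose angle is closest to 0 (the high side).
    high_side = round(((-relative_bearing_deg) % 360.0) / step) % n
    centre = n // 2
    return [radii[(high_side + j - centre) % n] for j in range(n)]


# Example: 8 fingers labelled by index, finger 0 at 90 degrees from high side.
frame = [0, 1, 2, 3, 4, 5, 6, 7]
rotated = rotate_to_high_side(frame, 90.0)
```

With 8 fingers and a 90 degree bearing, finger 6 is the one on the high side, so it ends up at the centre position of the rotated frame.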

Manual edits of data will always be a useful tool to remove values from the data set. When the arms of the tool are damaged or clogged, these high or low values will obscure the reporting of remaining useful high and low values.

Clarity offers several methods of replacement, so the user can decide on the most suitable technique to substitute the erroneous or misleading values.

Various situations such as when you need to import a reference Gamma Ray or merge together two logging runs will require you to splice and merge files together.

After importing the data into Clarity you can choose which curves at selected intervals you want to be present in your output file.

The goal of processing is to have the best data available to allow the creation of new calculated curves which enhance the analysis and interpretation of your data.

Clarity adds numerous values, such as penetration percentage and minimum diameter, to the project file. These are available to export to LAS and to use in the joint-by-joint report later in the reporting workflow.
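For readers unfamiliar with these calculated curves, the sketch below shows one conventional way to derive them from a centralised frame. It assumes penetration is the deepest excursion beyond the nominal inner radius, expressed as a percentage of nominal wall thickness, and that minimum diameter is the smallest sum of opposing finger radii. Clarity's exact definitions are not stated here, so both formulas are assumptions.

```python
def penetration_percent(radii, nominal_id, nominal_od):
    """Deepest penetration in one frame as a percentage of wall thickness."""
    nominal_inner_radius = nominal_id / 2.0
    wall_thickness = (nominal_od - nominal_id) / 2.0
    if wall_thickness <= 0:
        raise ValueError("nominal OD must be larger than nominal ID")
    depth = max(radii) - nominal_inner_radius
    return max(0.0, depth / wall_thickness * 100.0)


def minimum_diameter(radii):
    """Smallest diameter across opposing finger pairs (even finger count)."""
    n = len(radii)
    if n == 0 or n % 2:
        raise ValueError("an even, non-zero number of fingers is required")
    half = n // 2
    return min(radii[i] + radii[i + half] for i in range(half))


# Example frame: nominal ID 100 and OD 120 (wall thickness 10),
# with one finger in a 2.5 deep pit and one finger on a 1.0 thick deposit.
frame = [50.0, 50.0, 52.5, 50.0, 49.0, 50.0, 50.0, 50.0]
pen = penetration_percent(frame, nominal_id=100.0, nominal_od=120.0)
min_d = minimum_diameter(frame)
```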

All user interactions with Clarity processing modules are logged and then available to inspect and export at the end of processing.

This allows the end user to inspect all edits, creates an audit report for future inspection and assists in troubleshooting for future users opening the same project.

ANSA Customer Support

+44 (0) 1224 336624 sales@ansa-data.com

Aberdeen Data Hub

Viking House, 1 Claymore Avenue, Aberdeen Energy Park AB23 8GW. UK

Houston Data Hub

Suite 330 9940 W. Sam Houston Parkway S. Houston, Texas 77099. USA